In this chapter, you will learn how to work with static files and serve them to the client correctly.

The application described in this chapter is not intended for use in production environments as-is. Note that successful completion of this entire guide is required to create a production-ready application.

Preparing the environment

If you haven’t prepared your environment during the previous steps, please do so using the instructions provided in the “Preparing the environment” chapter.

If your environment has stopped working or the instructions in this chapter don’t work, please refer to these hints:

Let’s launch Docker Desktop. It takes some time for this application to start Docker. If there are no errors during the startup process, check that Docker is running and is properly configured:

docker run hello-world

You will see the following output if the command completes successfully:

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

b8dfde127a29: Pull complete

Digest: sha256:9f6ad537c5132bcce57f7a0a20e317228d382c3cd61edae14650eec68b2b345c

Status: Downloaded newer image for hello-world:latest

Hello from Docker!

This message shows that your installation appears to be working correctly.

Should you have any problems, please refer to the Docker documentation.

Start Docker:

sudo systemctl restart docker

Make sure that Docker is running:

sudo systemctl status docker

If the Docker start is successful, you will see the following output:

● docker.service - Docker Application Container Engine

Loaded: loaded (/lib/systemd/system/docker.service; enabled; vendor preset: enabled)

Active: active (running) since Thu 2021-06-24 13:05:17 MSK; 13s ago

TriggeredBy: ● docker.socket

Docs: https://docs.docker.com

Main PID: 2013888 (dockerd)

Tasks: 36

Memory: 100.3M

CGroup: /system.slice/docker.service

└─2013888 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

dockerd[2013888]: time="2021-06-24T13:05:16.936197880+03:00" level=warning msg="Your kernel does not support CPU realtime scheduler"

dockerd[2013888]: time="2021-06-24T13:05:16.936219851+03:00" level=warning msg="Your kernel does not support cgroup blkio weight"

dockerd[2013888]: time="2021-06-24T13:05:16.936224976+03:00" level=warning msg="Your kernel does not support cgroup blkio weight_device"

dockerd[2013888]: time="2021-06-24T13:05:16.936311001+03:00" level=info msg="Loading containers: start."

dockerd[2013888]: time="2021-06-24T13:05:17.119938367+03:00" level=info msg="Loading containers: done."

dockerd[2013888]: time="2021-06-24T13:05:17.134054120+03:00" level=info msg="Daemon has completed initialization"

systemd[1]: Started Docker Application Container Engine.

dockerd[2013888]: time="2021-06-24T13:05:17.148493957+03:00" level=info msg="API listen on /run/docker.sock"

Now let’s check if Docker is available and its configuration is correct:

docker run hello-world

You will see the following output if the command completes successfully:

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

b8dfde127a29: Pull complete

Digest: sha256:9f6ad537c5132bcce57f7a0a20e317228d382c3cd61edae14650eec68b2b345c

Status: Downloaded newer image for hello-world:latest

Hello from Docker!

This message shows that your installation appears to be working correctly.

Should you have any problems, please refer to the Docker documentation.

Let’s start the minikube cluster we have already configured in the “Preparing the environment” chapter:

minikube start

Set the default Namespace so that you don’t have to specify it every time you invoke kubectl:

kubectl config set-context minikube --namespace=werf-guide-app

If minikube start completes successfully, you will see output similar to the following:

😄 minikube v1.20.0 on Ubuntu 20.04

✨ Using the docker driver based on existing profile

👍 Starting control plane node minikube in cluster minikube

🚜 Pulling base image ...

🎉 minikube 1.21.0 is available! Download it: https://github.com/kubernetes/minikube/releases/tag/v1.21.0

💡 To disable this notice, run: 'minikube config set WantUpdateNotification false'

🔄 Restarting existing docker container for "minikube" ...

🐳 Preparing Kubernetes v1.20.2 on Docker 20.10.6 ...

🔎 Verifying Kubernetes components...

▪ Using image gcr.io/google_containers/kube-registry-proxy:0.4

▪ Using image k8s.gcr.io/ingress-nginx/controller:v0.44.0

▪ Using image registry:2.7.1

▪ Using image docker.io/jettech/kube-webhook-certgen:v1.5.1

▪ Using image docker.io/jettech/kube-webhook-certgen:v1.5.1

▪ Using image gcr.io/k8s-minikube/storage-provisioner:v5

🔎 Verifying registry addon...

🔎 Verifying ingress addon...

🌟 Enabled addons: storage-provisioner, registry, default-storageclass, ingress

🏄 Done! kubectl is now configured to use "minikube" cluster and "werf-guide-app" namespace by default

Make sure that the command output contains the following line:

Restarting existing docker container for "minikube"

Its absence means that a new minikube cluster was created instead of using the old one. In this case, repeat all the steps required to install the environment using minikube.

Now run the following command in a separate PowerShell terminal and leave it running in the background (do not close its window):

minikube tunnel --cleanup=true

Let’s start the minikube cluster we have already configured in the “Preparing the environment” chapter:

minikube start --namespace werf-guide-app

Set the default Namespace so that you don’t have to specify it every time you invoke kubectl:

kubectl config set-context minikube --namespace=werf-guide-app

If minikube start completes successfully, you will see output similar to the following:

😄 minikube v1.20.0 on Ubuntu 20.04

✨ Using the docker driver based on existing profile

👍 Starting control plane node minikube in cluster minikube

🚜 Pulling base image ...

🎉 minikube 1.21.0 is available! Download it: https://github.com/kubernetes/minikube/releases/tag/v1.21.0

💡 To disable this notice, run: 'minikube config set WantUpdateNotification false'

🔄 Restarting existing docker container for "minikube" ...

🐳 Preparing Kubernetes v1.20.2 on Docker 20.10.6 ...

🔎 Verifying Kubernetes components...

▪ Using image gcr.io/google_containers/kube-registry-proxy:0.4

▪ Using image k8s.gcr.io/ingress-nginx/controller:v0.44.0

▪ Using image registry:2.7.1

▪ Using image docker.io/jettech/kube-webhook-certgen:v1.5.1

▪ Using image docker.io/jettech/kube-webhook-certgen:v1.5.1

▪ Using image gcr.io/k8s-minikube/storage-provisioner:v5

🔎 Verifying registry addon...

🔎 Verifying ingress addon...

🌟 Enabled addons: storage-provisioner, registry, default-storageclass, ingress

🏄 Done! kubectl is now configured to use "minikube" cluster and "werf-guide-app" namespace by default

Make sure that the command output contains the following line:

Restarting existing docker container for "minikube"

Its absence means that a new minikube cluster was created instead of using the old one. In this case, repeat all the steps required to install the environment from scratch using minikube.

If you have inadvertently deleted the application’s Namespace, run the following commands to proceed with the guide:

kubectl create namespace werf-guide-app

kubectl create secret docker-registry registrysecret \
  --docker-server='https://index.docker.io/v1/' \
  --docker-username='<Docker Hub username>' \
  --docker-password='<Docker Hub password>'

You will see the following output if the command completes successfully:

namespace/werf-guide-app created

secret/registrysecret created

If nothing worked, repeat all the steps described in the “Preparing the environment” chapter and create a new environment from scratch. If that did not help either, please tell us about your problem in our Telegram chat or create an issue on GitHub. We will be happy to help you!

Preparing the repository

Update the existing repository containing the application:

Run the following commands in PowerShell:

cd ~/werf-guide/app

# To see what changes we will make later in this chapter, let's replace all the application files

# in the repository with new, modified files containing the changes described below.

git rm -r .

cp -Recurse -Force ~/werf-guide/guides/examples/rails/030_assets/* .

git add .

git commit -m WIP

# Enter the command below to show the files we are going to change.

git show --stat

# Enter the command below to show the changes that will be made.

git show

Run the following commands in Bash:

cd ~/werf-guide/app

# To see what changes we will make later in this chapter, let's replace all the application files

# in the repository with new, modified files containing the changes described below.

git rm -r .

cp -rf ~/werf-guide/guides/examples/rails/030_assets/. .

git add .

git commit -m WIP

# Enter the command below to show files we are going to change.

git show --stat

# Enter the command below to show the changes that will be made.

git show

Doesn’t work? Try the instructions for preparing a new repository below (the “I am just starting from this chapter” option).

Prepare a new repository with the application:

Run the following commands in PowerShell:

# Clone the example repository to ~/werf-guide/guides (if you have not cloned it yet).

if (-not (Test-Path ~/werf-guide/guides)) {

git clone https://github.com/werf/website $env:HOMEPATH/werf-guide/guides

}

# Copy the (unchanged) application files to ~/werf-guide/app.

rm -Recurse -Force ~/werf-guide/app

cp -Recurse -Force ~/werf-guide/guides/examples/rails/020_logging ~/werf-guide/app

# Make the ~/werf-guide/app directory a git repository.

cd ~/werf-guide/app

git init

git add .

git commit -m initial

# To see what changes we will make later in this chapter, let's replace all the application files

# in the repository with new, modified files containing the changes described below.

git rm -r .

cp -Recurse -Force ~/werf-guide/guides/examples/rails/030_assets/* .

git add .

git commit -m WIP

# Enter the command below to show the files we are going to change.

git show --stat

# Enter the command below to show the changes that will be made.

git show

Run the following commands in Bash:

# Clone the example repository to ~/werf-guide/guides (if you have not cloned it yet).

test -e ~/werf-guide/guides || git clone https://github.com/werf/website ~/werf-guide/guides

# Copy the (unchanged) application files to ~/werf-guide/app.

rm -rf ~/werf-guide/app

cp -rf ~/werf-guide/guides/examples/rails/020_logging ~/werf-guide/app

# Make the ~/werf-guide/app directory a git repository.

cd ~/werf-guide/app

git init

git add .

git commit -m initial

# To see what changes we will make later in this chapter, let's replace all the application files

# in the repository with new, modified files containing the changes described below.

git rm -r .

cp -rf ~/werf-guide/guides/examples/rails/030_assets/. .

git add .

git commit -m WIP

# Enter the command below to show files we are going to change.

git show --stat

# Enter the command below to show the changes that will be made.

git show

Adding an /image page to the application

Let’s add a new /image endpoint to our application. It will return a page with a set of static files. We will use Webpacker (instead of Sprockets) to bundle all JS, CSS, and media files.

Currently, our application provides a basic API with no means to manage static files and frontend code. To turn this API into a simple web application, we have generated a skeleton of the new Rails application (omitting the --api and --skip-javascript flags we used before):

rails new \
  --skip-action-cable --skip-action-mailbox --skip-action-mailer --skip-action-text \
  --skip-active-job --skip-active-record --skip-active-storage \
  --skip-jbuilder --skip-turbolinks \
  --skip-keeps --skip-listen --skip-bootsnap --skip-spring --skip-sprockets \
  --skip-test --skip-system-test .

This skeleton includes all the functionality we need for managing JS code and static files. The following changes have been made to our application:

- Adding webpacker to Gemfile.
- Generating basic Webpacker configuration files.
- Creating a set of directories to host the source static files.

Now let’s add an HTML page template (available at /image) with the Get image button:

<!DOCTYPE html>

<html>

<head>

<title>werf-guide-app</title>

<meta name="viewport" content="width=device-width,initial-scale=1">

<%= csrf_meta_tags %>

<%= csp_meta_tag %>

<!-- Preloading CSS files. -->

<%= stylesheet_pack_tag 'application', media: 'all' %>

<!-- Preloading JS files. -->

<%= javascript_pack_tag 'application' %>

</head>

<body>

<div id="container">

<!-- The "Get image" button; it will generate an Ajax request when clicked. -->

<button type="button" id="show-image-btn">Get image</button>

</div>

</body>

</html>

Clicking the Get image button must result in an Ajax request that fetches and displays an SVG image:

window.onload = function () {
  var btn = document.getElementById("show-image-btn")
  btn.addEventListener("click", _ => {
    fetch(require("images/werf-logo.svg"))
      .then((data) => data.text())
      .then((html) => {
        const svgContainer = document.getElementById("container")
        svgContainer.insertAdjacentHTML("beforeend", html)
        var svg = svgContainer.getElementsByTagName("svg")[0]
        svg.setAttribute("id", "image")
        btn.remove()
      })
  })
}

Our page will also use the app/javascript/styles/site.css CSS file.

JS and CSS files, as well as the SVG image, will be bundled with Webpack and placed in /packs/...:

import Rails from "@rails/ujs"

import "src/image.js"

import "styles/site.css"

Rails.start()
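Bundled assets can be cached aggressively because production bundles usually carry a digest of their content in the file name. The sketch below only illustrates that idea in plain Ruby; it is not Webpacker's actual implementation, and the `digested_name` helper and the 20-character digest length are assumptions made for the example.

```ruby
require "digest"

# Hypothetical helper: builds a file name that embeds a digest of the content,
# similar in spirit to the names a bundler emits for production builds.
def digested_name(logical_name, content)
  ext  = File.extname(logical_name)        # e.g. ".js"
  base = File.basename(logical_name, ext)  # e.g. "application"
  hash = Digest::SHA256.hexdigest(content)[0, 20]
  "#{base}-#{hash}#{ext}"
end

v1 = digested_name("application.js", "console.log('v1')")
v2 = digested_name("application.js", "console.log('v2')")

puts v1
puts v2
# The names differ whenever the content differs, so a cached copy of an old
# file is never requested under the new name.
puts v1 != v2
```

Because a changed file gets a new name, a client never needs to revalidate a file it has already downloaded.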

Let’s update the controller and routes by adding a new /image endpoint that takes care of the HTML template created above:

class ApplicationController < ActionController::Base
  def image
    render template: "layouts/image"
  end

  def ping
    render plain: "pong\n"
  end
end

Rails.application.routes.draw do
  get '/image', to: 'application#image'
  get '/ping', to: 'application#ping'
end

The application now has a new endpoint called /image in addition to the /ping endpoint we made in previous chapters. This new endpoint displays a page that uses different types of static files.

The commands provided at the beginning of the chapter allow you to view a complete, exhaustive list of the changes made to the application in the current chapter.

Serving static files

By default, Rails does not serve static files in production environments. Instead, Rails developers suggest using a reverse proxy such as NGINX for this task, because a reverse proxy serves static files much more efficiently than Rails and Puma.

In practice, you can do without the reverse proxy running in front of an application only during development. In addition to efficiently serving static files, the reverse proxy nicely complements the application server (Puma) by providing many additional features not available at the application server itself. It also allows for fast and flexible request routing.

There are several ways to deploy the application server behind the reverse proxy in Kubernetes. We will use a popular and straightforward approach that nevertheless scales well: an NGINX container is deployed in each Pod alongside the Rails/Puma container. This auxiliary container proxies all requests to Rails/Puma except requests for static files, which NGINX serves itself.

Now, let’s get to the implementation.

Making changes to the build and deploy process

First of all, we have to make changes to the application build process. Now we need to build an NGINX image in addition to the Rails/Puma one. This NGINX image must have all the static files to serve to the client:

# The base image. It is used both for assembling assets and as the base for a backend image.

FROM ruby:2.7 as base

WORKDIR /app

# Copy the files needed to install the application dependencies into the image.

COPY Gemfile Gemfile.lock ./

# Install application dependencies.

RUN bundle install

#############################################################################

# A temporary image to build assets.

FROM base as assets

# Install Node.js.

RUN curl -fsSL https://deb.nodesource.com/setup_14.x | bash -

RUN apt-get update && apt-get install -y nodejs

# Install Yarn.

RUN npm install yarn --global

# Install JS application dependencies.

COPY yarn.lock package.json ./

RUN yarn install

# Copy all other application files into the image.

COPY . .

# Build assets.

RUN SECRET_KEY_BASE=NONE RAILS_ENV=production rails assets:precompile

#############################################################################

# The primary backend image with Rails and Puma.

FROM base as backend

# Copy all other application files into the image.

COPY . .

# Add the webpack manifest to the backend image (the assets themselves are not copied).

COPY --from=assets /app/public/packs/manifest.json /app/public/packs/manifest.json

#############################################################################

# Add an NGINX image with the pre-built assets.

FROM nginx:stable-alpine as frontend

WORKDIR /www

# Copy the pre-built assets from the above image.

COPY --from=assets /app/public /www

# Copy the NGINX configuration.

COPY .werf/nginx.conf /etc/nginx/nginx.conf
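The backend image needs manifest.json even though it ships no assets, because view helpers such as javascript_pack_tag and stylesheet_pack_tag use the manifest to turn a logical name into the path of the bundled file. The following is a simplified sketch of such a lookup in plain Ruby. The manifest contents, the digests, and the `asset_path` helper are hypothetical; a real manifest contains more entries.

```ruby
require "json"

# A hypothetical, shortened manifest; real entries and digests will differ.
MANIFEST_JSON = <<~JSON
  {
    "application.js": "/packs/js/application-0123456789abcdef.js",
    "application.css": "/packs/css/application-fedcba9876543210.css"
  }
JSON

# Resolve a logical asset name to the public path recorded in the manifest.
def asset_path(manifest, name)
  manifest.fetch(name) { raise KeyError, "asset not found in manifest: #{name}" }
end

manifest = JSON.parse(MANIFEST_JSON)
puts asset_path(manifest, "application.js")
```

The backend only has to emit the right URLs in the HTML; the files behind those URLs live in the NGINX image.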

The NGINX config will be added to the NGINX image during the build:

user nginx;

worker_processes 1;

pid /run/nginx.pid;

events {

worker_connections 1024;

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

upstream backend {

server 127.0.0.1:3000 fail_timeout=0;

}

server {

listen 80;

server_name _;

root /www;

client_max_body_size 100M;

keepalive_timeout 10s;

# For the /packs path, assets are delivered directly from the NGINX container file system.

location /packs/ {

# Due to the nature of the Webpacker's bundling mechanism, the client can keep an asset cache

# for as long as necessary without worrying about invalidation.

expires 1y;

add_header Cache-Control public;

add_header Last-Modified "";

add_header ETag "";

# Serve pre-compressed files if possible (instead of compressing them on the fly).

gzip_static on;

access_log off;

try_files $uri =404;

}

# The media assets (pictures, etc.) will also be retrieved from the NGINX container file system,

# however, we will disable gzip compression for them.

location /packs/media {

expires 1y;

add_header Cache-Control public;

add_header Last-Modified "";

add_header ETag "";

access_log off;

try_files $uri =404;

}

# All requests, except for asset requests, are routed to the backend.

location / {

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header Host $http_host;

proxy_redirect off;

proxy_pass http://backend;

}

}

}
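For plain prefix locations such as the three above, NGINX selects the longest matching prefix, so a request under /packs/media is handled by the media block even though it also matches /packs/ and /. The sketch below models only that selection rule in plain Ruby. The symbols and the sample request paths are illustrative assumptions, and the model ignores regular-expression and exact-match locations, which this configuration does not use.

```ruby
# Prefix locations from the configuration above, mapped to who answers the request.
LOCATIONS = {
  "/packs/"      => :nginx_static_gzip, # assets, pre-compressed variants allowed
  "/packs/media" => :nginx_static,      # media assets, no gzip_static
  "/"            => :rails_backend      # everything else is proxied to Puma
}.freeze

# Pick the longest prefix that matches the request path, regardless of the
# order in which the location blocks are declared.
def route(path)
  prefix = LOCATIONS.keys.select { |p| path.start_with?(p) }.max_by(&:length)
  LOCATIONS.fetch(prefix)
end

# Sample paths are hypothetical; real asset names include a digest.
%w[/image /ping /packs/js/application.js /packs/media/images/werf-logo.svg].each do |path|
  puts "#{path} -> #{route(path)}"
end
```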

Let’s update werf.yaml so that werf can build two images (backend, frontend) instead of one:

project: werf-guide-app

configVersion: 1

---

image: backend

dockerfile: Dockerfile

target: backend

---

image: frontend

dockerfile: Dockerfile

target: frontend

Add a new NGINX container to the application’s Deployment:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: werf-guide-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: werf-guide-app
  template:
    metadata:
      labels:
        app: werf-guide-app
    spec:
      imagePullSecrets:
      - name: registrysecret
      containers:
      - name: backend
        image: {{ .Values.werf.image.backend }}
        command: ["bundle", "exec", "rails", "server"]
        ports:
        - containerPort: 3000
        env:
        - name: RAILS_ENV
          value: production
      - name: frontend
        image: {{ .Values.werf.image.frontend }}
        ports:
        - containerPort: 80

Services and Ingresses should now connect to port 80 instead of 3000 so that all requests are routed through the NGINX proxy rather than sent directly to Rails/Puma:

apiVersion: v1
kind: Service
metadata:
  name: werf-guide-app
spec:
  selector:
    app: werf-guide-app
  ports:
  - name: http
    port: 80

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
  name: werf-guide-app
spec:
  rules:
  - host: werf-guide-app.test
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: werf-guide-app
            port:
              number: 80

Checking that everything works as expected

Now we have to re-deploy our application:

werf converge --repo <DOCKER HUB USERNAME>/werf-guide-app

You should see the following output:

...

┌ ⛵ image backend

│ ┌ Building stage backend/dockerfile

│ │ backend/dockerfile Sending build context to Docker daemon 320kB

│ │ backend/dockerfile Step 1/24 : FROM ruby:2.7 as base

│ │ backend/dockerfile ---> 1faa5f2f8ca3

...

│ │ backend/dockerfile Step 24/24 : LABEL werf-version=v1.2.12+fix2

│ │ backend/dockerfile ---> Running in d6126083b530

│ │ backend/dockerfile Removing intermediate container d6126083b530

│ │ backend/dockerfile ---> 434a85e3df9d

│ │ backend/dockerfile Successfully built 434a85e3df9d

│ │ backend/dockerfile Successfully tagged 145074fa-9024-4bcb-9144-e245e1bbaf87:latest

│ │ ┌ Store stage into <DOCKER HUB USERNAME>/werf-guide-app

│ │ └ Store stage into <DOCKER HUB USERNAME>/werf-guide-app (14.27 seconds)

│ ├ Info

│ │ name: <DOCKER HUB USERNAME>/werf-guide-app:7d52fed109415751ad6f28dd259d7a201dc2ffe34863e70f0a404adf-1629129180941

│ │ id: 434a85e3df9d

│ │ created: 2022-08-16 18:53:00 +0000 UTC

│ │ size: 335.1 MiB

│ └ Building stage backend/dockerfile (23.70 seconds)

└ ⛵ image backend (30.13 seconds)

┌ ⛵ image frontend

│ ┌ Building stage frontend/dockerfile

│ │ frontend/dockerfile Sending build context to Docker daemon 320kB

│ │ frontend/dockerfile Step 1/28 : FROM ruby:2.7 as base

│ │ frontend/dockerfile ---> 1faa5f2f8ca3

...

│ │ frontend/dockerfile Step 28/28 : LABEL werf-version=v1.2.12+fix2

│ │ frontend/dockerfile ---> Running in 29323e1512c7

│ │ frontend/dockerfile Removing intermediate container 29323e1512c7

│ │ frontend/dockerfile ---> 8f637e588672

│ │ frontend/dockerfile Successfully built 8f637e588672

│ │ frontend/dockerfile Successfully tagged 215f9544-2637-4ecf-9b81-8cbbcd6777fe:latest

│ │ ┌ Store stage into <DOCKER HUB USERNAME>/werf-guide-app

│ │ └ Store stage into <DOCKER HUB USERNAME>/werf-guide-app (9.83 seconds)

│ ├ Info

│ │ name: <DOCKER HUB USERNAME>/werf-guide-app:bdb809c26315846927553a069f8d6fe72c80e33d2a599df9c6ed2be0-1629129180706

│ │ id: 8f637e588672

│ │ created: 2022-08-16 18:53:00 +0000 UTC

│ │ size: 9.4 MiB

│ └ Building stage frontend/dockerfile (19.02 seconds)

└ ⛵ image frontend (29.28 seconds)

┌ Waiting for release resources to become ready

│ ┌ Status progress

│ │ DEPLOYMENT REPLICAS AVAILABLE UP-TO-DATE

│ │ werf-guide-app 2/1 1 1

│ │ │ POD READY RESTARTS STATUS ---

│ │ ├── guide-app-566dbd7cdc-n8hxj 2/2 0 Running Waiting for: replicas 2->1

│ │ └── guide-app-c9f746755-2m98g 0/2 0 ContainerCreating

│ └ Status progress

│

│ ┌ Status progress

│ │ DEPLOYMENT REPLICAS AVAILABLE UP-TO-DATE

│ │ werf-guide-app 2->1/1 1 1

│ │ │ POD READY RESTARTS STATUS

│ │ ├── guide-app-566dbd7cdc-n8hxj 2/2 0 Running -> Terminating

│ │ └── guide-app-c9f746755-2m98g 2/2 0 ContainerCreating -> Running

│ └ Status progress

└ Waiting for release resources to become ready (9.11 seconds)

Release "werf-guide-app" has been upgraded. Happy Helming!

NAME: werf-guide-app

LAST DEPLOYED: Mon Aug 16 18:53:19 2022

NAMESPACE: werf-guide-app

STATUS: deployed

REVISION: 23

TEST SUITE: None

Running time 41.55 seconds

Before accessing the application, start the Minikube tunnel to expose the Ingress controller externally:

minikube tunnel

Keep this terminal running while you use the application. This command creates a network route on your machine to access the LoadBalancer service inside Minikube.

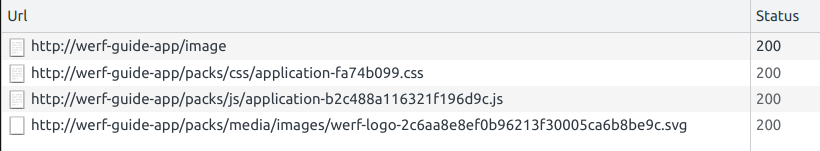

Go to http://werf-guide-app.test/image in your browser and click on the Get image button. You should see the following output:

Note which resources were requested and which links were used (the last resource here was retrieved via the Ajax request):

Now our application not only provides an API but boasts a set of tools to manage static and JavaScript files effectively.

Furthermore, our application can handle a high load since many requests for static files will not affect the operation of the application as a whole. Note that you can quickly scale Puma (serves dynamic content) and NGINX (serves static content) by increasing the number of replicas in the application’s Deployment.
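Part of that load relief comes from client-side caching: with `expires 1y` and `add_header Cache-Control public` in the NGINX configuration, responses under /packs/ should carry a one-year max-age. The sketch below shows one way to read that value from Cache-Control headers in plain Ruby. The `max_age` helper and the sample header values are assumptions for illustration; check the actual headers in your browser's developer tools.

```ruby
# Extract max-age (in seconds) from one or more Cache-Control header values.
# Returns nil when no max-age directive is present.
def max_age(cache_control_values)
  directives = cache_control_values.flat_map { |value| value.split(",") }.map(&:strip)
  directives.each do |directive|
    match = directive.match(/\Amax-age=(\d+)\z/i)
    return match[1].to_i if match
  end
  nil
end

# Values we would expect for an asset under /packs/ (an assumption based on
# the NGINX directives mentioned above).
values = ["max-age=31536000", "public"]
seconds = max_age(values)
puts "cacheable for #{seconds / 86_400} days"
```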